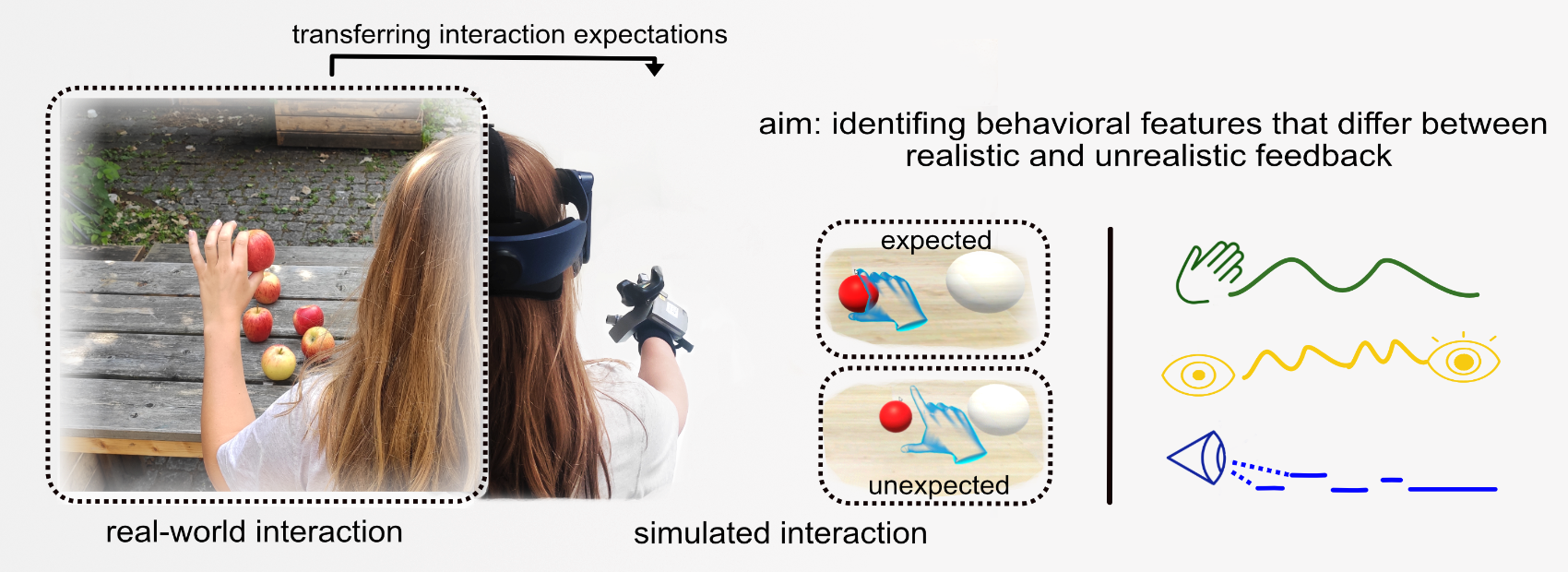

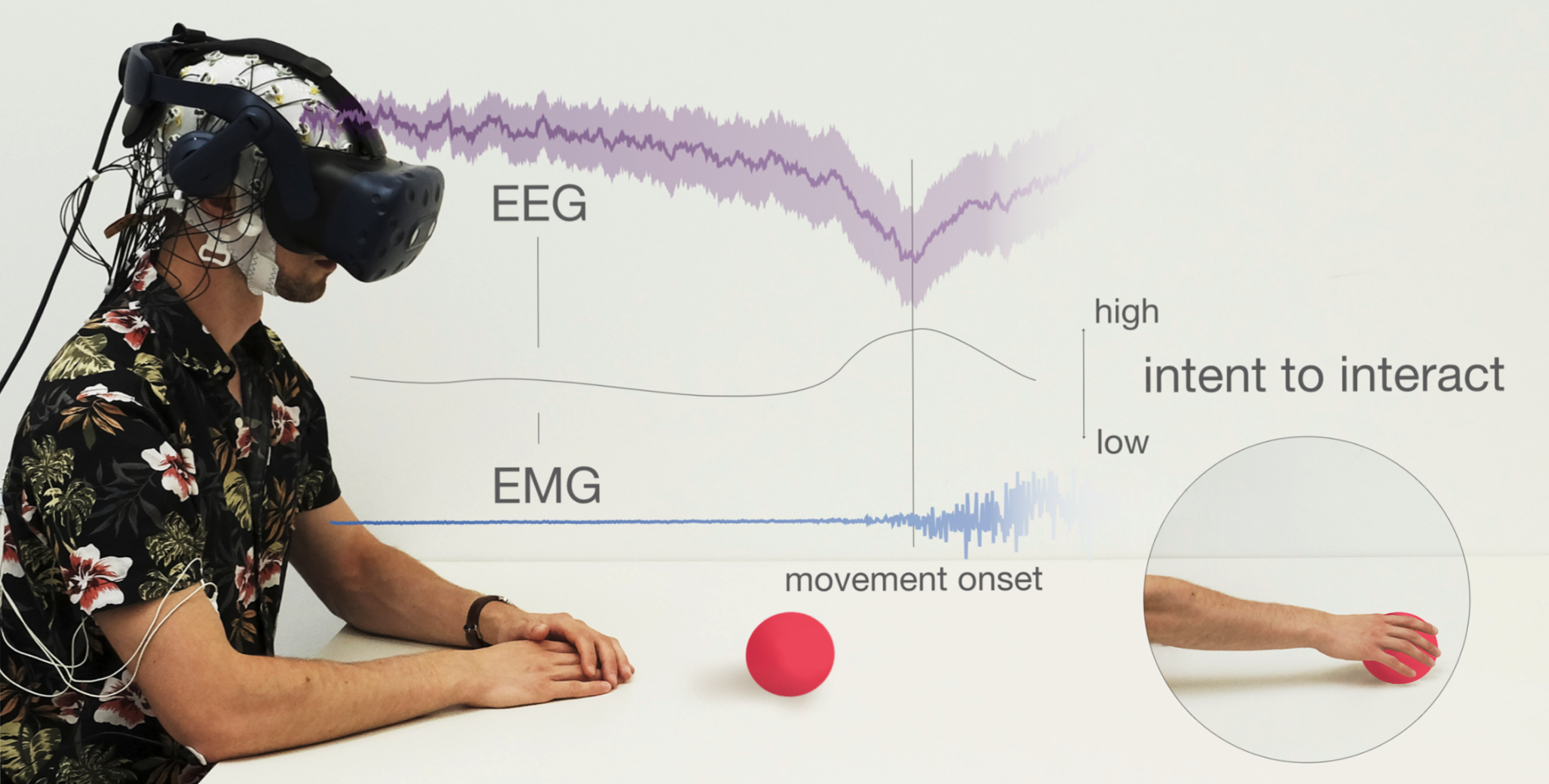

I work at the intersection of human-computer interaction (HCI) and cognitive neuroscience. During my PhD, I published papers on multimodal interface technology, investigating neural and movement signatures for novel, natural interaction experiences in extended reality (XR).

news

| Oct 16, 2024 |

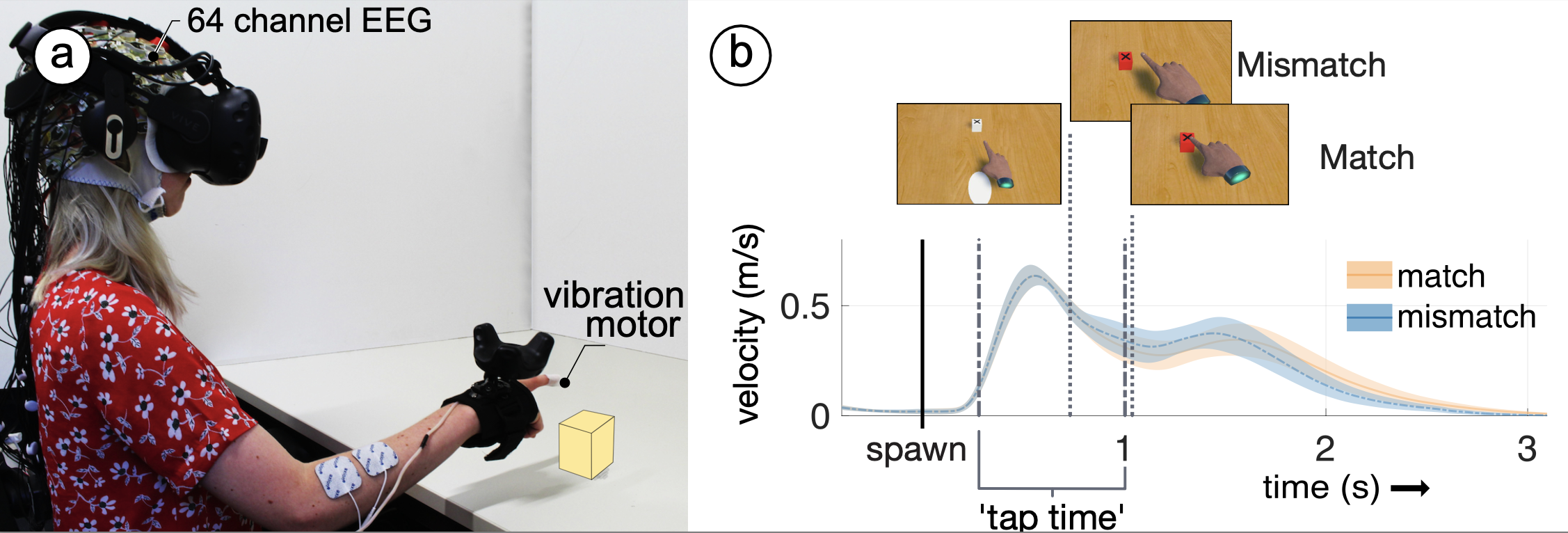

With Martin Feick at UIST 2024 in Pittsburgh. Martin presented our paper “Predicting the Limits: Tailoring Unnoticeable Hand Redirection Offsets in Virtual Reality to Individuals’ Perceptual Boundaries”. See a brief demo video here:

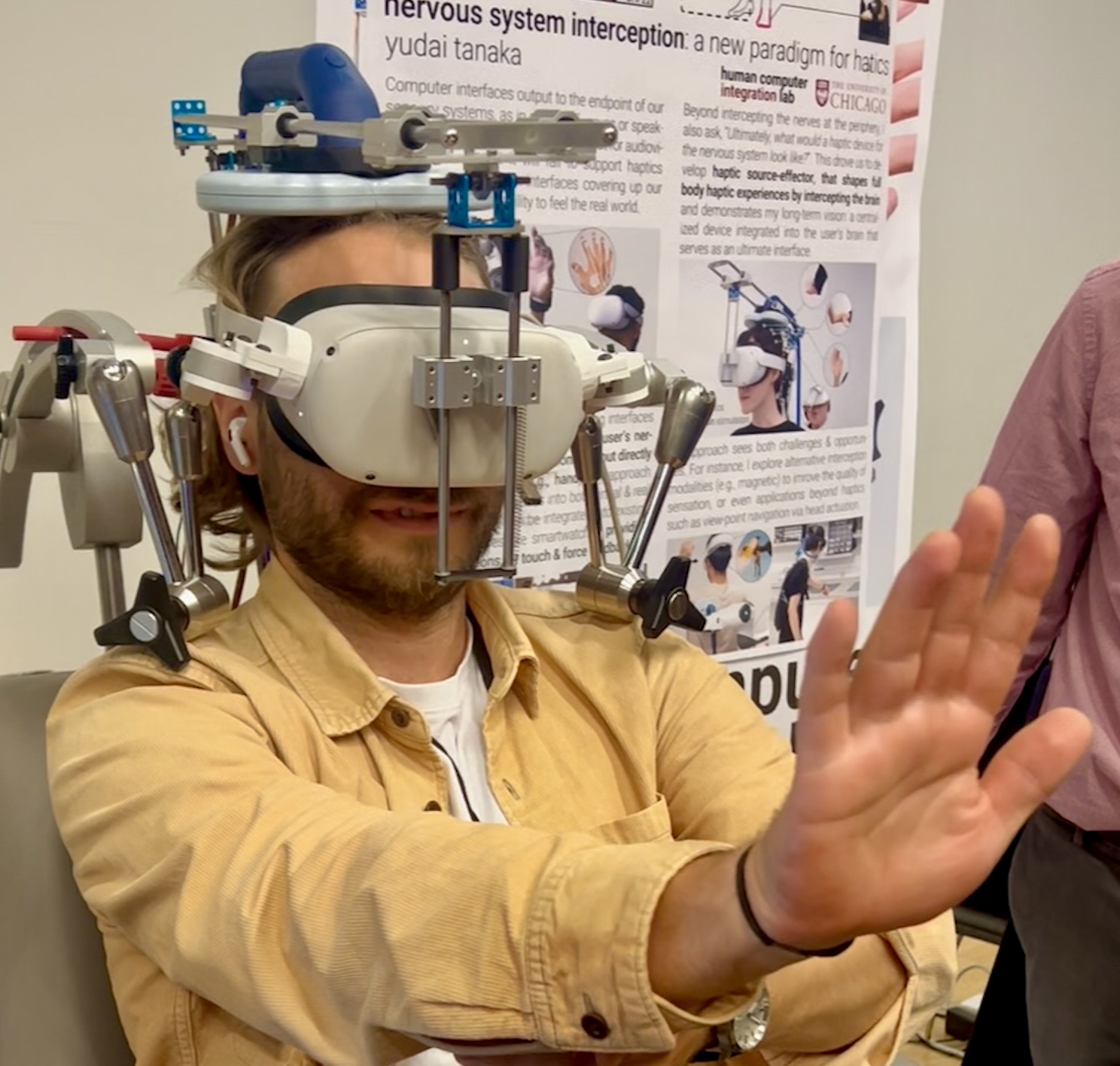

Fun VR demo using TMS to simulate haptic experience directly in the brain :).

|

|---|---|

| Sep 27, 2024 |

Invited to VR Summit Bochum and helping out with the hackathon. Was a fun event with a great lineup of speakers.

|

| Jul 24, 2024 |

Intense three days of discussions about presence experience in VR and how, if, it can be measured. We were super lucky to have the Einstein Center for Digital Future host our meeting. Planning to write up a report of the meeting and the findings of the discussions.

|

| Jul 12, 2024 |

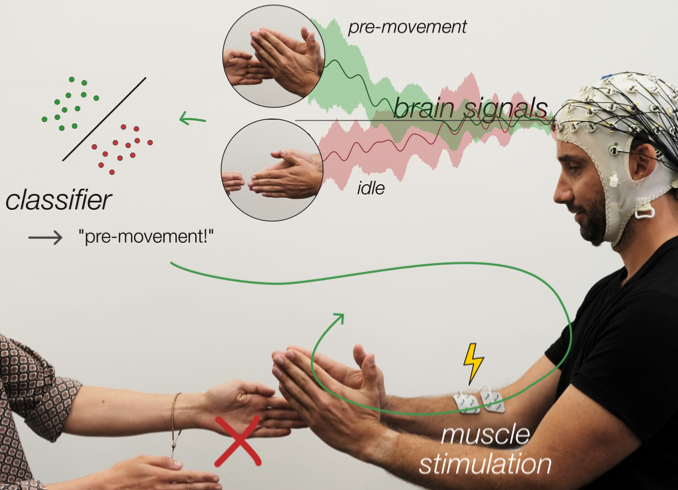

Neuroergonomics conference in Bordeaux was good fun and came with a surprise. I was awarded the Raja Parasuraman: Young Investigator Award sponsored by BIONIC at Neuroergonomics 2024 conference for the paper “Agency-preserving action augmentation: towards preemptive muscle control using brain-computer interfaces“. The paper, which I wrote with Leonie Terfurth and Klaus Gramann is on arXiv now!

|

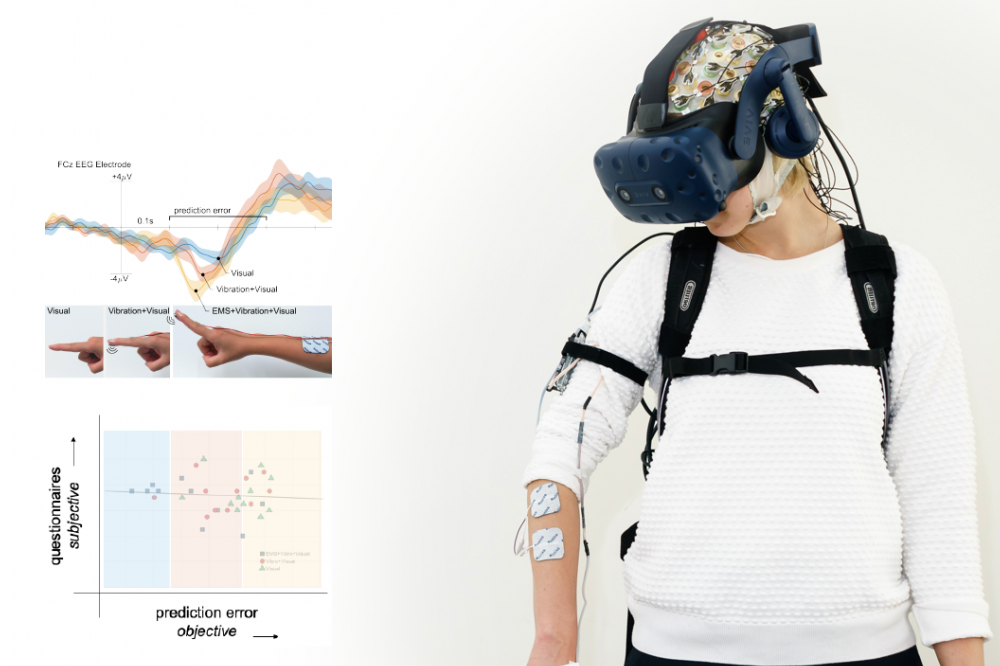

| May 06, 2024 | I now published the data we reported on in two earlier two publications at CHI and in JNE. The data is available on openneuro and the detailed specification can now be found in Frontiers in Neuroergonomics. |

| May 03, 2024 | Happy to announce a workshop on defining outstanding challenges in measuring the experience of presence in XR. More information can be found here. |

| Apr 10, 2024 | Workshop on BIDS at Neuroergonomics 2024: Together with Sein Jeung, we will be teaching a workshop on the BIDS (Brain Imaging Data Standard) format. More information can be found here. |

| Mar 20, 2024 |

Tried out some VR interfaces in the demo session, was really impressed by some smell and heat rendering interfaces.

|